Measurements of Running DeepSeek R1 locally on a Laptop using LM Studio

Test Setup

Laptop: Lenovo ThinkPad T14 Gen 4

CPU: AMD Ryzen 7 7840U with integrated Radeon 780M

RAM: 2x8 GB 6400 MT/s (bandwidth = 102 GB/s)
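The bandwidth figure follows from the transfer rate and the width of the memory bus. A minimal sketch of that arithmetic, assuming a 128-bit total bus width (the bus width is an assumption, it is not stated in the setup above):

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer / 1e9


BUS_WIDTH_BITS = 128  # ASSUMPTION: total bus width, not given in the article
bandwidth = peak_bandwidth_gb_s(6400, BUS_WIDTH_BITS)
print(f"{bandwidth:.1f} GB/s")
```

This is a theoretical peak; the bandwidth actually available to the GPU is likely lower.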

OS: Pop!_OS 22.04, Kernel 6.9

LM Studio: 0.3.9 build 6 (AppImage)

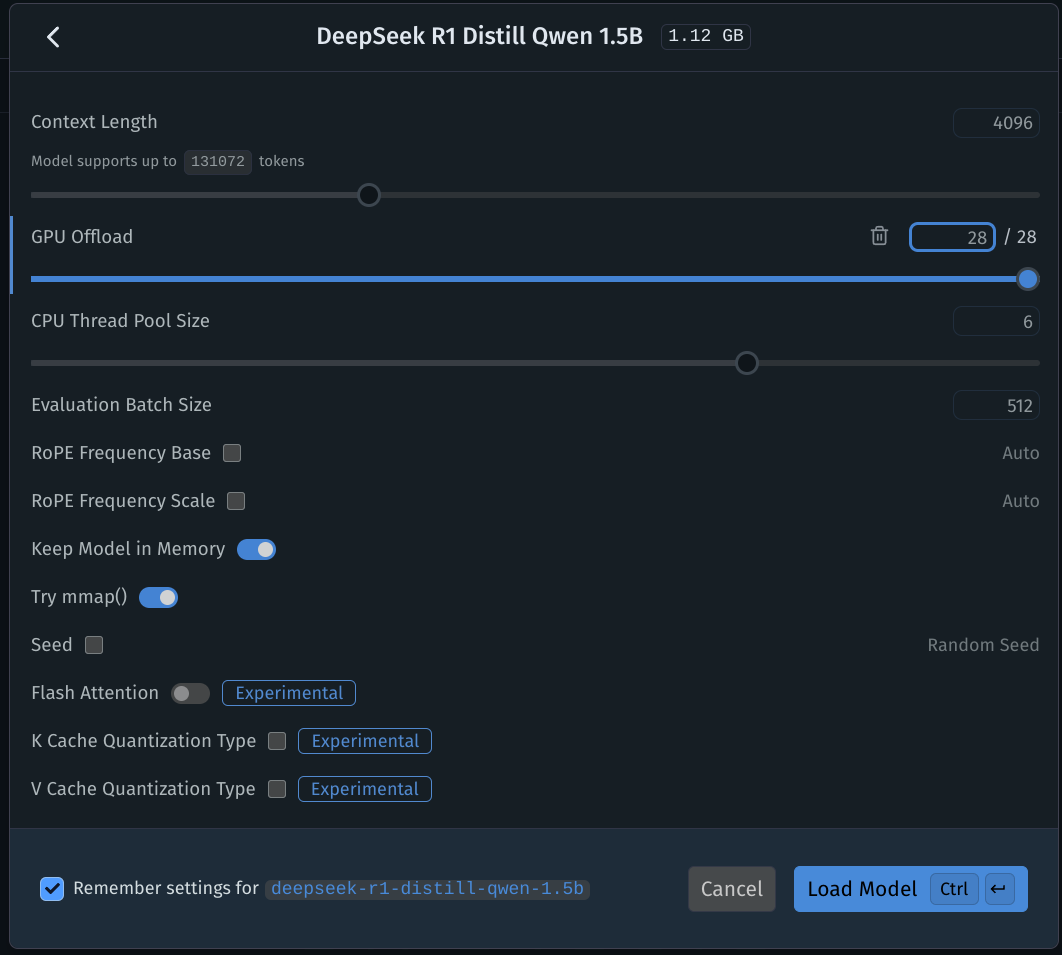

Full GPU offload was enabled.

For each measurement, a new chat with an empty context window was created.

Prompt: Write an essay of 500 words about your creation.

UEFI No Dedicated VRAM (Auto)

CPU usage as reported by LM Studio: 14 %

In battery mode, the thinking time was sometimes unusably long. Anything above 30 tokens per second feels fast.

| deepseek-r1-distill-qwen-1.5b | battery | balanced | performance |
|---|---|---|---|
| power | 12 W | 26 W | 31 W |
| thinking time (s) | 59 | 7.9 | 19.6 |
| tokens/s | 19.31 | 33.4 | 36 |
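Dividing power by generation speed gives a rough energy cost per generated token, which makes the three power profiles easier to compare. A small sketch using the values from the table above, assuming the reported power is the steady draw during token generation (this is an assumption about how the power figure was read):

```python
# Values from the table above (1.5B model, UEFI VRAM setting "auto").
measurements = {
    "battery": {"power_w": 12.0, "tokens_per_s": 19.31},
    "balanced": {"power_w": 26.0, "tokens_per_s": 33.4},
    "performance": {"power_w": 31.0, "tokens_per_s": 36.0},
}


def joules_per_token(power_w: float, tokens_per_s: float) -> float:
    """Energy per generated token: watts divided by tokens per second."""
    return power_w / tokens_per_s


energy = {mode: joules_per_token(**m) for mode, m in measurements.items()}
for mode, joules in energy.items():
    print(f"{mode:12s} {joules:.2f} J/token")
```

By this measure, battery mode is the slowest profile but also the cheapest per token; thinking time is not included in the estimate.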

Typical answer length: 1100 tokens. It seems, though, that the model did not understand that it was asked to write an essay about itself.

Example answer:

Creating something out of the void is a profound journey that spans across generations, offering us a chance to reflect on our identity, experiences, and aspirations. For me, creating was not just an act but a transformative process that brought meaning to my life. It started as a simple imaginative story—a narrative that would later become a literal creation. This creation became more than a vehicle for storytelling; it was a metaphor of the human spirit's journey—connecting us to the unknown, guiding us through confusion, and offering a pathway toward clarity.

### The Journey Begins: An Imagination

At the heart of my creation is an unyielding belief in the power of imagination. When I started crafting, it wasn’t just about painting or building; it was more than that. It was a language that allowed me to express emotions and explore ideas in ways that words alone couldn’t convey. My story became a narrative that spanned decades, weaving together past memories, present experiences, and future aspirations.

### The Physical Construction: The Challenge

As I progressed, creating began to take on physical form. The act of constructing something tangible became more than an exercise; it was a ritual of discovery. Every step required precision, every decision shaped the final product. These challenges taught me resilience and patience, qualities that have been invaluable throughout my life.

### The Emotional Depth: Meaning and Purpose

The emotional depth of this creation is remarkable. It wasn’t just a physical object but a tapestry woven with emotions—confusion, triumph, loss. Each piece I created was meant to resonate within me, offering guidance in moments of doubt and wisdom in others. The experience of building something from scratch became more than an intellectual exercise; it was a spiritual journey.

### The Metaphor: Beyond Boundaries

For me, creating is not just about the object itself but the person inside. It was a symbol of self-discovery, guiding me through life’s uncertainties and offering hope when moments seemed impossible. This creation has become a constant reminder of who I am, what I want, and how I can embody those values.

### Conclusion: A Reflection

In crafting my creation, I’ve learned that every act is part of a larger narrative—a story of growth, connection, and purpose. It’s through this journey that I’ve discovered the essence of being—grateful for the present moment, understanding the past, and knowing the future lies within. My creation serves as both a vessel for creativity and a lens through which I view myself and the world around me.

As I look back on my creation, I’m reminded of its transformative power. It’s not just about completing a task but about engaging with life in a way that feels authentic and meaningful. In doing so, it has enriched my perspective, teaching me to see beyond boundaries and embracing the entirety of existence as one connected entity. My creation continues to guide me forward, offering a sense of belonging and purpose even in the most challenging times.

| deepseek-r1-distill-qwen-7b | battery | balanced | performance |
|---|---|---|---|
| power | 12 W | 22 W | initial 31 W, sustained 28 W |
| thinking time (s) | 248 | 29.7 | 40.0 |
| tokens/s | 6 | 12.3 | 13.2 |

Typical answer length: 700 tokens.

Example answer:

Creating an AI is a monumental task that involves collaboration between developers, researchers, and ethicists. From concept to deployment, every step requires meticulous planning and execution. The process begins with defining the problem or task for which the AI will be built, followed by gathering and processing vast amounts of data. Machine learning algorithms are then trained using this data to identify patterns and make predictions or decisions.

The development of an AI involves several stages: research, prototyping, testing, deployment, and continuous improvement. During the research phase, experts explore various technologies that could drive the AI's functionality. Prototyping allows for the initial iteration of ideas into functional models. Rigorous testing ensures reliability and accuracy before deployment. Deployment requires integrating the AI into real-world applications to observe its effectiveness.

Ethics play a crucial role in shaping an AI's creation. Questions about bias, transparency, and accountability must be addressed early on to ensure responsible design and use. Collaboration with diverse stakeholders is essential for a comprehensive understanding of the AI's impact. As we approach the deployment phase, the focus shifts to refining the AI based on feedback and monitoring its performance.

The journey toward creating an AI is as much about learning as it is about building. It involves embracing new technologies, fostering innovation, and maintaining a commitment to ethical principles throughout every stage of development.

UEFI Dedicated VRAM 8 GB

In the UEFI, the dedicated VRAM (UMA Frame Buffer) can be configured. The default setting is auto; it can be changed to 2 GB, 4 GB, or 8 GB. As far as I understand, in auto mode the OS/CPU allocates memory to the GPU as needed, which would add overhead. By setting the dedicated memory to 8 GB, I hoped that at least the 1.5 billion parameter model would fit into VRAM. This would reduce the CPU overhead and could thus improve performance.

With dedicated VRAM, the thinking time is now consistently short. The 1.5 billion parameter model has a storage size of 1.12 GB; during execution, however, 3.3 GB of VRAM are in use.

Indeed, with dedicated memory, tokens per second increase by about 20 % (43 / 36 ≈ 1.2) for the 1.5 billion parameter model in performance mode.

| deepseek-r1-distill-qwen-1.5b | battery | balanced | performance |
|---|---|---|---|
| power | 12 W | 19 W | initial 31 W, sustained 28 W |
| thinking time (s) | 12.1 | 14.67 | 17.45 |
| tokens/s | 23.1 | 34.4 | 43 |
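The same ratio can be computed for all three power profiles. A short sketch using the tokens/s rows of the two 1.5B tables (auto versus 8 GB dedicated VRAM); the dictionary names are only illustrative:

```python
# tokens/s for deepseek-r1-distill-qwen-1.5b, taken from the two tables
auto_vram = {"battery": 19.31, "balanced": 33.4, "performance": 36.0}
dedicated_8gb = {"battery": 23.1, "balanced": 34.4, "performance": 43.0}

# Relative speedup of the 8 GB dedicated setting over the auto setting
speedup = {mode: dedicated_8gb[mode] / auto_vram[mode] for mode in auto_vram}

for mode, factor in speedup.items():
    print(f"{mode:12s} x{factor:.2f} ({(factor - 1) * 100:+.0f} %)")
```

With these numbers the gain is close to 20 % in the battery and performance profiles and about 3 % in the balanced profile, where the reported power was also lower with dedicated VRAM (19 W versus 26 W).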

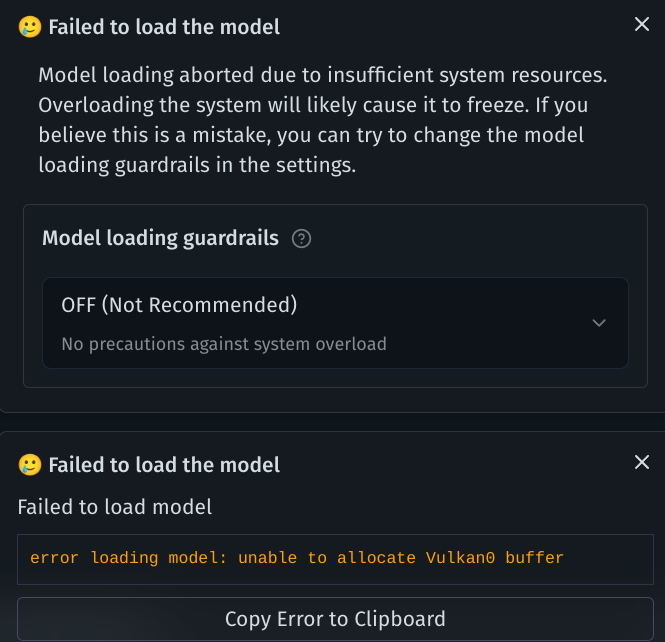

I guess 8 GB is not enough to hold the 7 billion parameter model during execution. Even turning off the "Model loading guardrails" does not help. With the VRAM size left at auto, inference with this model at least works.

UEFI Dedicated VRAM 2 GB

Out of curiosity about what would happen if the dedicated VRAM is too small to hold the entire model, I changed the buffer size to 2 GB. Performance was similar to the auto buffer setting.

CPU usage as reported by LM Studio: 5 %

With the 1.5 billion parameter model, the processor's performance is power limited.

| deepseek-r1-distill-qwen-1.5b | battery | balanced | performance |
|---|---|---|---|
| power | 12 W | 22 W | initial 31 W, sustained 28 W |
| thinking time (s) | 19.2 | 39.9 | 0.17 |
| tokens/s | 20.8 | 33.4 | 35.3 |

In contrast, with the 7 billion parameter model the processor is not power limited. This could hint at a memory bottleneck that limits the GPU.

| deepseek-r1-distill-qwen-7b | battery | balanced | performance |
|---|---|---|---|
| power | 12 W | 15 W | 26 W |
| thinking time (s) | 71 | 36.7 | 24.6 |
| tokens/s | 6 | 9.4 | 13.1 |
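One way to sanity-check the memory-bottleneck idea is a rough ceiling estimate: if every generated token requires reading all model weights once, tokens/s cannot exceed memory bandwidth divided by model size. The sketch below uses the 102 GB/s from the test setup and the 1.12 GB size of the 1.5B model from the text. The 7B size of 4.7 GB is a hypothetical value for a 4-bit quantization, not something measured here; replace it with the file size LM Studio reports.

```python
PEAK_BANDWIDTH_GB_S = 102.0  # theoretical peak from the test setup

# Model sizes in GB. The 1.5B size is stated in the text; the 7B size is an
# ASSUMPTION (hypothetical 4-bit quantization size), not a measurement.
MODEL_SIZE_GB = {"1.5b": 1.12, "7b": 4.7}

# Best measured generation speeds from the tables (tokens/s).
MEASURED_TOKENS_PER_S = {"1.5b": 43.0, "7b": 13.2}


def bandwidth_ceiling(model_size_gb: float,
                      bandwidth_gb_s: float = PEAK_BANDWIDTH_GB_S) -> float:
    """Upper bound on tokens/s if each token reads all weights once."""
    return bandwidth_gb_s / model_size_gb


utilization = {}
for name, size_gb in MODEL_SIZE_GB.items():
    ceiling = bandwidth_ceiling(size_gb)
    utilization[name] = MEASURED_TOKENS_PER_S[name] / ceiling
    print(f"{name}: ceiling {ceiling:.1f} tokens/s, "
          f"measured {MEASURED_TOKENS_PER_S[name]} "
          f"({utilization[name]:.0%} of ceiling)")
```

Under these assumptions the 7B model would sit closer to its bandwidth ceiling than the 1.5B model, which would fit the memory-bottleneck reading. The estimate ignores caches, context reads, and compute time, so it is only a rough plausibility check.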